XAI SBIR

Explainable Artificial Intelligence (XAI) SBIR

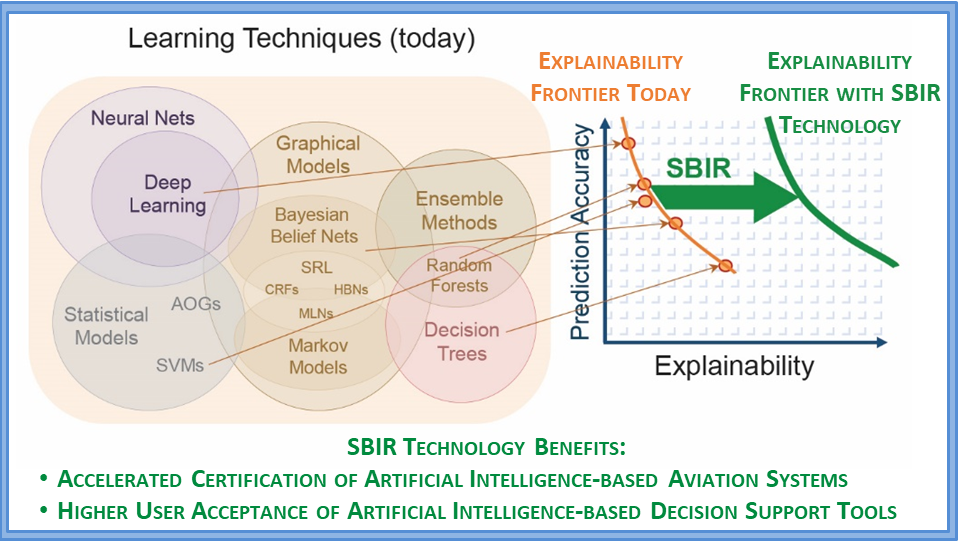

Increased reliance on autonomy technologies is a key enabler for future aviation operations, which will involve high-traffic-density, high-diversity airspace operations that include newly emerging users (e.g., domestic supersonic flights, Urban Air Mobility, regional air taxis). Verification and validation (V&V) of these autonomy technologies, as well as user acceptance of them, are key technical challenges. Artificial Intelligence (AI) algorithms are at the forefront of these autonomy technologies and are finding increased use in aviation applications. Most AI algorithms are perceived as black boxes whose decisions result from complex rules learned on the fly. Unless those decisions are explained in a human-understandable form, human end users are less likely to accept them.

ATAC is developing an approach for V&V of AI-based flight-critical software systems by leveraging methods that make the learning in AI algorithms more explainable to human users. Our Phase I Small Business Innovation Research (SBIR) effort develops a prototype V&V technology called EXplained Process and Logic of Artificial INtelligence Decisions (EXPLAIND). It provides a proof of concept of the proposed explainability approach by applying EXPLAIND to the V&V of a NASA-developed machine learning (ML) algorithm for identifying anomalous air traffic safety incidents.